Introduction to State Knowledge Graph

What is SKG?

I have developed a new concept called the State Knowledge Graph (SKG). SKG is a weighted directed graph where object nodes are connected to state nodes, and state nodes are further linked to other state nodes. This structure indicates that an object can exist in various states and that the connections between these states exhibit correlations. Much like how humans, including simple animals, are sensitive to the state or change of state of things and environments—such as night/daytime, moving/not moving, cold/hot, and alive/dead—an effective SKG captures these nuances in a systematic way.

In recent years, Large Language Models (LLMs) have proven capable of handling a range of language-related tasks. LLMs seem to possess a form of world knowledge similar to human perspectives, and some recent studies even show their capability of creating sophisticated to-do plans for robotic tasks. However, true artificial general intelligence (AGI) should not be confined to generating word tokens of high probabilities. AGI should understand the world as it perceives it: observable states perceived by AGI will be state nodes in SKG, meaning that knowledge will now be paired with actual reality.

Furthermore, since SKG is a directed graph, it leverages knowledge for different solutions by investigating even distant state nodes. For example, SKG might suggest moving to a sunny place for your dog’s weight loss because more sunny days increase your desire to walk with your dog, consequently burning more calories for your dog. Considering that anything and everything in the world is an object with states, I strongly believe SKG is an highly suitable structure for AGI.

Graph Definition

A graph \( G \) is defined as \( G = (V, E) \) where:

- \( V \) is the set of nodes (vertices). In this case, \( V = O \cup F \cup S \).

- \( O \) is the set of objects (e.g., \(\{Robot, AirConditioner, House, Resident\}\)).

- \( F \) is the set of functions (e.g., \(\{Robot\_recharge(), Robot\_turn\_on(), Robot\_go\_to(), Robot\_repair()\}\)).

- \( S \) is the set of states (e.g., \(\{Robot\_busy, Robot\_battery, AirConditioner\_broken, AirConditioner\_on/off, House\_brightness, House\_temperature, Resident\_asleep, Resident\_temperature\}\)). Each state in S has an intensity value between 0 and 1 (i.e., [0,1]). When the intensity is close to 1, the state exhibits maximum intensity, and when it is close to 0, the state has minimum intensity.

- \( E \) is the set of edges, consisting of two types:

1. Unweighted and undirected edges between objects and either functions or states. These edges represent the fact that the objects possess such functions or states.

2. Weighted and directed edges representing the influence of functions on states and the influence of one state on another.

Unweighted and Undirected Edges

The unweighted and undirected edges can be defined as:

\[ E_U = \{ (o, fs) \mid o \in O, fs \in F \cup S \} \]

For example:

\[ \{ (Robot, Robot\_recharge()), (Robot, Robot\_go\_to()), (Robot, Robot\_busy), (AirConditioner, AirConditioner\_on/off), (AirConditioner, AirConditioner\_broken) \} \]

Weighted and Directed Edges

The weighted and directed edges can be defined as:

\[ E_W = \{ (fs, s, w) \mid fs \in F \cup S, s \in S, w \in [-1, 1] \} \]

For example:

\[ \{ (Robot\_recharge(), Robot\_battery, 0.94), (Robot\_turn\_on(), AirConditioner\_on/off, 0.99), (AirConditioner\_on/off, House\_temperature, -0.64), (House\_temperature, Resident\_temperature, 0.51) \} \]

Understanding Weighted and Directed Edges

In the context of weighted and directed edges defined by \( E_W \), it's crucial to understand the implications of the weight (\( w \)) associated with each edge. The weight value, ranging from -1 to 1, indicates the intensity of influence or correlation between functions and states, as well as between states themselves.

- Positive Weight (\( w > 0 \)): A positive weight suggests that the initiating function or state increases the intensity or exhibits a positive correlation with the target state. The closer the weight is to 1, the stronger this positive influence becomes. For instance, an edge with a weight of 0.8 from sunny weather (state) to temperature (state), denoted as \((Weather\_sunny, Temperature, 0.8)\), implies a significant increase in temperature or a strong positive correlation.

- Zero Weight (\( w = 0 \)): A weight of 0 indicates that the effect of the initiating function or state on the target state is negligible or portrays a weak correlation. This suggests that the change in the target state is minimal or non-existent due to the initiating function or state.

- Negative Weight (\( w < 0 \)): A negative weight demonstrates that the initiating function or state decreases the intensity of the target state or has a negative correlation with it. Weights close to -1 imply a stronger negative influence. For example, an edge denoted as \((Weather\_rainy, Dryness, -0.85)\) suggests a considerable decrease in dryness or a strong negative correlation, indicating that rainy weather greatly reduces dryness levels.

By understanding the weight implications, we can better interpret the dynamics and interactions within the graph. Specifically, we gain insights into how certain functions and states influence others, even over long distances within the system.

Example

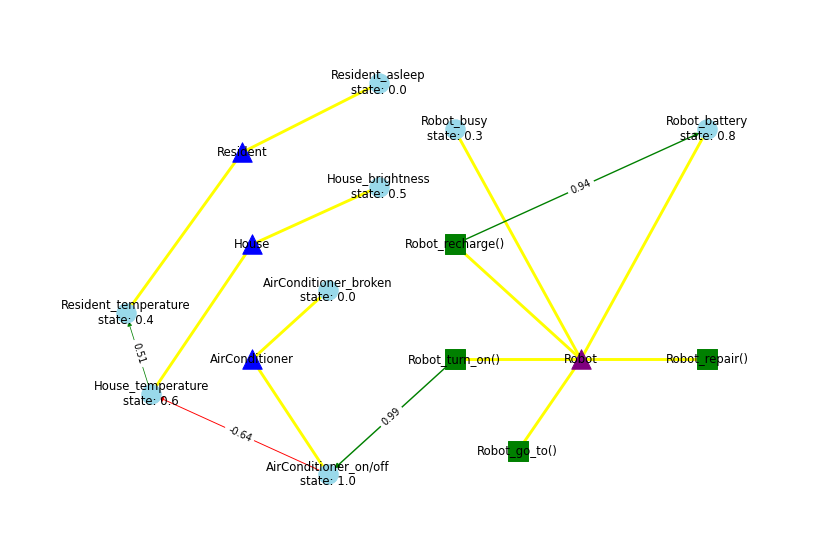

Now that we have defined the graph, let's draw our own example graph.

1. Nodes:

- Objects: \(\{Robot, AirConditioner, House, Resident\}\)

- Functions: \(\{Robot\_recharge(), Robot\_turn\_on(), Robot\_go\_to(), Robot\_repair()\}\)

- States: \(\{Robot\_busy, Robot\_battery, AirConditioner\_broken, AirConditioner\_on/off, House\_brightness, House\_temperature, Resident\_asleep, Resident\_temperature\}\), each with arbitrary current intensities of 0.3, 0.8, 0, 1, 0.5, 0.6, 0, and 0.4, respectively.

2. Unweighted and Undirected Edges:

- \(\{(Robot, Robot\_recharge()), (Robot, Robot\_turn\_on()), (Robot, Robot\_go\_to()), (Robot, Robot\_repair()), (Robot, Robot\_busy), (Robot, Robot\_battery)\}\)

- \(\{(AirConditioner, AirConditioner\_broken), (AirConditioner, AirConditioner\_on/off)\}\)

- \(\{(House, House\_brightness), (House, House\_temperature)\}\)

- \(\{(Resident, Resident\_asleep), (Resident, Resident\_temperature)\}\)

3. Weighted and Directed Edges:

- \(\{(Robot\_recharge(), Robot\_battery, 0.94), (Robot\_turn\_on(), AirConditioner\_on/off, 0.99), (AirConditioner\_on/off, House\_temperature, -0.64), (House\_temperature, Resident\_temperature, 0.51)\}\)

This can be visualized like the following.

Enter Python Code to Generate a Graph

Result:

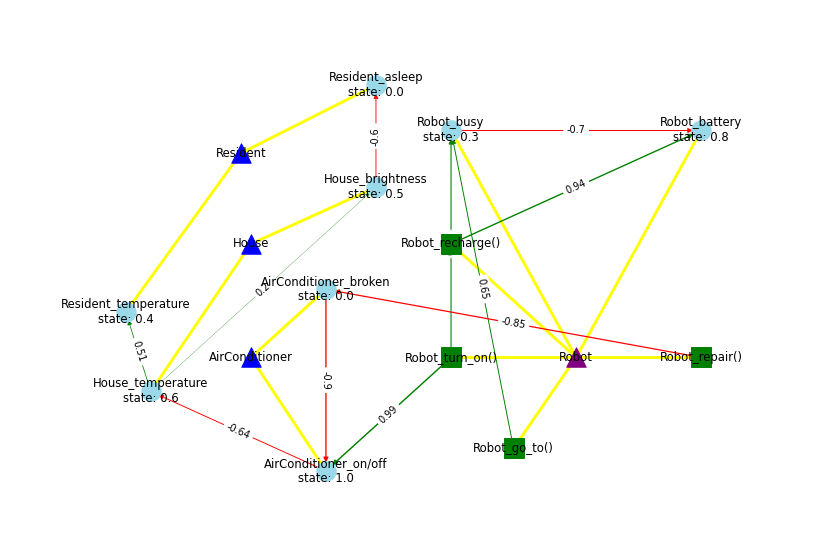

Advantage of SKG 1: expanding connections by leveraging LLMs

Since languages naturally describe objects with states and common knowledge inherently understand cause&effect, it is not difficult for LLMs to analyze SKG and develop it even further.

Let's provide ChatGPT with the SKG of the above example and see how it can suggest possible additional connections between nodes.

Ask ChatGPT

ChatGPT answer

Try it yourself:

1. Remove lines of ChatGPT's previous response between "=== what LLM suggests Start ===" and "=== what LLM suggests End ===" in the following code.

2. Copy and paste a new response generated by you (ChatGPT often gives different answers each time) into the previous response's place.

Enter Python Code to Generate a Graph

Result:

Advantage of SKG 2: achieving robotic tasks by changing states until goal states by leveraging LLMs

Imagine you walk into a room where someone has abandoned the process of making ramen. Regardless of the stage at which the ramen was left, you can assess what has been done and continue cooking it. The underlying concept is that if a robot knows the desired goal states (cooked ramen) and can observe the relevant states, it can adjust its current state to move closer to achieving the goal.

The following is pseudocode for this procedure:

Get the task

Set the goal states for the task

Get the current states

While (the current states ≠ the goal states)

Set the next state for the goal states

Set the sequence of functions for the next state

Do the sequence of functions

Get the current states

This procedure is done by collaboration of Robot (read States and change States) and LLMs (suggest States and Functions):

Let's follow this procedure by considering an example: The robot is given a task of making an iced coffee.

First, let's have LLMs do "Set the goal states for the task".

Ask ChatGPT: "Set the goal states"

ChatGPT answer

Note that your ChatGPT's answer might be different from the below as it often gives different answers each time.

Then, "Get the current states". It should be the actual states of the environment as a robot would currently see. However, as it is a hypothetical example, let's establish an arbitrary environment. Note that numbering Objects (e.g. Cupboard1) is for Robot to distinguish Object from each other (e.g. Cupboard1 is different from Cupboard2).

The current states:

("Jar1_in_Bathroom1", type="state", state=1.0)

("Jar1_contains_IcedWater1", type="state", state=1.0)

("Cup1_on_Cupboard2", type="state", state=1.0)

("Cup1_on_Cupboard1", type="state", state=0.0)

("Cup1_on_Table1", type="state", state=0.0)

("Cup1_contains_Coffee", type="state", state=0.0)

("Cup1_contains_Ice", type="state", state=0.0)

("Cup1_contains_Nothing", type="state", state=1.0)

("Cup1_temperature", type="state", state=0.7)

("Stove1_in_Kitchen1", type="state", state=1.0)

("Table1_in_Kitchen1", type="state", state=1.0)

("Cupboard1_contains_Nothing", type="state", state=1.0)

("Cupboard1_in_Kitchen1", type="state", state=1.0)

("Cupboard2_in_Bathroom1", type="state", state=1.0)

("InstantCoffeePack1_on_Shelf3", type="state", state=1.0)

("Robot_in_Backyard1", type="state", state=1.0)

Now that we've got "the goal states" and "the current states", let's see how LLMs deal with "Set the next state for the goal states" and "Set the sequence of functions for the next state" in the loop of "While (the current states ≠ the goal states)" of our example:

Ask ChatGPT: "Set the next state for the goal states" and "Set the sequence of functions for the next state"

ChatGPT answer

Note that your ChatGPT's answer might be different from the below as it often gives different answers each time.

Now we've got "The next State" ("Robot_in_Bathroom1", type="state", state=1.0) and "Sequence of Functions" (Robot_go_to(Bathroom1)) suggested by LLMs. Remember that "The next State" of the environment can be achieved by "Sequence of Functions" of Robot.

Therefore, it is time for Robot to take care of "Do the sequence of functions" (actions) and "Get the current states" in the while loop.

However, it is a hypothetical example without a physically existing robot, so let's assume the robotic behaviors have successfully fulfilled "Do the sequence of functions" so that "The current States" has reached "The next State". Also, we need to assume our hypothetical robot, after "Do the sequence of functions", has done "Get the current states" to reflect the adjusted states ("The next State").

As we did before at the previous "Get the current states", let's update "The current States" manually to reflect "The next State".

The current states:

("Jar1_in_Bathroom1", type="state", state=1.0)

("Jar1_contains_IcedWater1", type="state", state=1.0)

("Cup1_on_Cupboard2", type="state", state=1.0)

("Cup1_on_Cupboard1", type="state", state=0.0)

("Cup1_on_Table1", type="state", state=0.0)

("Cup1_contains_Coffee", type="state", state=0.0)

("Cup1_contains_Ice", type="state", state=0.0)

("Cup1_contains_Nothing", type="state", state=1.0)

("Cup1_temperature", type="state", state=0.7)

("Stove1_in_Kitchen1", type="state", state=1.0)

("Table1_in_Kitchen1", type="state", state=1.0)

("Cupboard1_contains_Nothing", type="state", state=1.0)

("Cupboard1_in_Kitchen1", type="state", state=1.0)

("Cupboard2_in_Bathroom1", type="state", state=1.0)

("InstantCoffeePack1_on_Shelf3", type="state", state=1.0)

("Robot_in_Bathroom1", type="state", state=1.0) #added

("Robot_in_Backyard1", type="state", state=0.0) #modified

Now we have finished the first loop of "While (the current states ≠ the goal states)". And as you can see, it is indeed "the current states ≠ the goal states" because the only thing our robot did was move to Bathroom1 from Backyard1.

But you've got the point: what Robot does is to adjust one State (or States, if changing one affects/requires multiple States) at a time (one iteration in the "While loop") by using its Functions (actions). Robot will repeat adjusting states until the goal states are met. For that, LLMs suggests what should be the next states based on the current states and suggests what sequence of functions the Robot should do to get to the next states. As above, LLMs suggested "("Robot_in_Bathroom1", type="state", state=1.0)" as The next State (presumably to get Cup1 on Cupboard2 in Bathroom1) to get close to The Goal states. Also note that it correctly suggested "Robot_go_to(Bathroom1)" as Sequence of Functions to fulfill the next state.

Let's repeat the "While loop" by using LLMs until "the current states = the goal states" (note that as above, The current States is updated manually by considering the effect of The next State):

ITERATION: 2

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

("Cup1_on_Table1", type="state", state=1.0)

Sequence of Functions:

Robot_go_to(Cupboard2)

Robot_grab(Cup1)

Robot_go_to(Kitchen1)

Robot_grab_release_thing1_at_thing2(Cup1, Table1)

*** Do the sequence of functions / Get the current states ***

Sequence of Functions: Fully completed by Robot.

The current States:

unchanged states omitted...

("Cup1_on_Cupboard2", type="state", state=0.0) #modified

("Cup1_on_Table1", type="state", state=1.0) #modified

("Robot_in_Kitchen1", type="state", state=1.0) #added

("Robot_in_Bathroom1", type="state", state=0.0) #modified

ITERATION: 3

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

("Cup_contains_coffee", type="state", state=1.0)

Sequence of Functions:

Robot_go_to(Shelf3)

Robot_grab(InstantCoffeePack1)

Robot_go_to(Table1)

Robot_make_thing1_contain_thing2(Cup1, InstantCoffeePack1)

*** Do the sequence of functions / Get the current states ***

Sequence of Functions: Fully completed by Robot.

The current States:

unchanged states omitted...

("Cup1_contains_Coffee", type="state", state=1.0) #modified

("Cup1_contains_Nothing", type="state", state=0.0) #modified

("InstantCoffeePack1_on_Table1", type="state", state=1.0) #added

("InstantCoffeePack1_on_Shelf3", type="state", state=0.0) #modified

("InstantCoffeePack1_contains_Nothing", type="state", state=1.0) #added

ITERATION: 4

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

("Jar1_in_Kitchen1", type="state", state=1.0)

Sequence of Functions:

Robot_go_to(Bathroom1)

Robot_grab(Jar1)

Robot_go_to(Kitchen1)

*** Do the sequence of functions / Get the current states ***

Sequence of Functions: Fully completed by Robot.

The current States:

unchanged states omitted...

("Jar1_in_Kitchen1", type="state", state=1.0) #added

("Jar1_in_Bathroom1", type="state", state=0.0) #modified

("Jar1_grabbed_by_robot", type="state", state=1.0) #added

ITERATION: 5

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

("Cup1_contains_Ice", type="state", state=1.0)

Sequence of Functions:

Robot_make_thing1_contain_thing2(Cup1, IcedWater1)

*** Do the sequence of functions / Get the current states ***

Sequence of Functions: Fully completed by Robot.

The current States:

unchanged states omitted...

("Jar1_contains_IcedWater1", type="state", state=0.8) #modified

("Cup1_contains_Ice", type="state", state=1.0) #modified

("Cup1_temperature", type="state", state=0.1) #modified

ITERATION: 6

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

("Jar1_grabbed_by_robot", type="state", state=0.0)

Sequence of Functions:

Robot_grab_release_thing1_at_thing2(Jar1, Table1)

*** Do the sequence of functions / Get the current states ***

Sequence of Functions: Fully completed by Robot.

The current States:

unchanged states omitted...

("Jar1_on_Table1", type="state", state=1.0) #added

("Jar1_grabbed_by_robot", type="state", state=0.0) #modified

ITERATION: 7

*** Set the next state for the goal states / Set the sequence of functions for the next state ***

The next State:

The Task/The Goal states have been met. Task achieved.

Sequence of Functions:

*** Do the sequence of functions / Get the current states ***

Sequence of Functions:

The current States:

The while loop is finished because the current states meet "the goal states":

Note that LLMs suggested The next State based solely on "The current States" to get closer to The goal States just like we humans can continue cooking a ramen at any stage.

We can conclude that if Robot can read necessary States and is equipped with necessary Functions, it can reach to the goal states one state-change at a time suggested by LLMs.

Following Questions: obstacles

Too many states to consider: The current states were not too many (Jar1_in_Bathroom1, Jar1_contains_IcedWater1, ..., Robot_in_Backyard1) because it was the hypothetical example based on the cherry-picking of States. In reality, an environment would be filled with many Objects having many States such as location, temperature, opened/closed, color and so forth. The fact that an environment like a household has many Obejcts going on would be a problem for LLMs because there is a token limit in LLMs, which means LLMs can consider only so many states to suggest "the next state". Therefore, getting selective States relevant to a task will be important. As solutions, examining weights of States (especailly the weights connected to "the goal states") in SKG or deciding early on which Objects should be involved for a task or reviewing successful previous tasks (in search of equivalent Objects and States) could be considered.

Infinite while loop: Theoretically, it is not impossible that "the current state" never meets "the goal states". For example, the goal states ("Bowl_contains_ice", type="state", state=1.0) cannot be met if the bowl and the ice in the fridge are too far away from each other and the Robot decides every time to bring the ice to the bowl instead the bowl to the ice because the ice would melt before reach to the bowl. For another example, Robot might keep cleaning an attic over and over again by moving boxes to different corners while leaving other rooms completely alone when the task is to clean an entire house.

Advantage of SKG 3: Generalized knowledge of Objects by States

If we encounter freshly baked bread, we soon notice that it is something to eat. Our mouth produce more saliva and we feel hungry.

How do we know that some objects are food and some are not? Or how do we know what things will help us with hunger? Not only the image of it but other States of food such as texture, smell, and taste all tell us that it is food. Eventually, we will experience and learn these kind of things help with hunger.

When we think of food, we think of such States. And we know that things with such States will be good for someone's hunger.

Leveraging SKG, we can train the Robot as we obtain the general knowledge of relation between food and people: a State (consumed) of some Objects with certain States (food-like shape, smell, texture, taste, and so forth) can change a State (hunger) of some Objects with certain States (people-like shape, aliveness, warmth, and so forth). In other words, we are trying to train Robot so that when it is given the specific pair of States (e.g. the positive connection between "consumed" and "hunger"), it will know what Objects (e.g. food-like thing and people-like thing) likely would satisfy such connection.

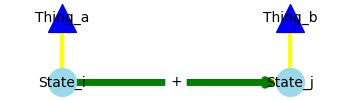

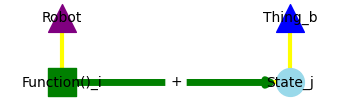

Definition of "Generalized knowledge of Objects by States":

1. Given a pair of specific States with its sign and direction of the connection, knowing that what Objects likely would satisfy such connection.

2. Given a pair of a specific Function and a State with its sign and direction of the connection, knowing that what Objects likely would satisfy such connection.

Note that an Object is denoted in terms of the relation of a specific pair of States such as (+ Consumed, Satiety). It is because the Robot does not have a concept of food but can be trained to understand what kind of Thing (Objects) would likely satisfy "(+ Consumed, Satiety)". Then, the Robot might give such Objects a name as Food.

Now that we have defined necessary notions, let's train the Robot to possess such knowledge. For example, learning what Objects likely are "(+ Consumed, Satiety)".

Training process : Deep learning

How is the Robot supposed to tell (+ Consumed, Satiety) from other Objects which are not food? We can start from the fact that Objects of "(+ Consumed, Satiety)" would share certain features (States) such as food-like shape, smell, texture, taste, and so forth. Therefore, we are training the Robot based on this fact that Objects with certain States (e.g. "food-like smell and taste") would satisfy a certain relation of two States (e.g. "(+ Consumed, Satiety)").

Suppose that the number of observable States (every type of State: shape, color, temperature, smell, texture, brokeness, asleep, brightness, and so forth) that an Object could possibly possess is n. Note that the same number "n" applies to any Object because "n" is the number of all types of States that the Robot can observe on an Object and States do not have to show high values (e.g. Food_asleep state would have a low value). Then, the total number of a pair of States would be n^2. Therefore, a specific pair of states can be denoted as (State_i, State_j, ± weight) where 1 ≤ i,j ≤ n and a postive sign indicates State_i affects State_j with a postive weight while a negative sign with a negative weight.

Let's define (± State_i, State_j) as a Giver (the Object that owns State_i) which would satisfy the specific pair of states (State_i, State_j, ± weight) where the sign of "± State_i" indicates the sign of "± weight" and (State_i, ± State_j) as a Receiver (the Object that owns State_j). Note that the sign of a Giver and a Receiver should be same as + or -.

For example, let's consider a pair of States (Consumed, Satiety, +). Then, (+ Consumed, Satiety) will be a Food-like thing (a Giver) and (Consumed, + Satiety) will be an Animal-like thing (a Receiver).

However the Robot does not know what is the Food-like thing or the Animal-like thing yet. It only can read the States of a Giver or a Receiver.

Let's train a model for the Robot to tell what Objects are the Food-like thing. As Food-like things share certain States in common like smell, taste, texture, and so forth, we can train the Robot to tell what Object would be (+Consumed, Satiety).

Let's assume that Robot has enough experiences (or many memories) of a Giver that was Consumed and affected Satiety of another Object (a Receiver) with a positive weight, a negative weight, or little weight (the collection of these weights will be y. Also note that y will be [-1,1] as defined at "Graph Definition") that there is enough dataset of (State_1, State_2, ... , State_n) of a Giver right before it was Consumed (i.e. Consumed State value is low yet. Note that it also prevents "Consumed" from being a prominent feature among other States). As introduced previously, each State has its own normalized value (e.g. "House_brightness state: 0.5"). Therefore, it can be converted to (Value_1, Value_2, ... , Value_n) where Value_k indicates the value of State_k.

Now that we have X = a set of (Value_1, Value_2, ... , Value_n) and y is from a set of weights of (Consumed, Satiety, ± weight), we can train our deep learning model.

The same learning process could apply to Receiver (i.e. (State_i, ± State_j) or (Function()_i, ± State_j)). X will be from the States of the Objcet that owns State_j while y will be from the weights between "State_i and State_j" or between "Function()_i and State_j".

What exactly has the Robot learned? It has learned an Object with what States would likely be a Giver or a Receiver for a certain relation between "State_i and State_j" or between "Function()_i and State_j". For example, it now knows what is Food-like thing from its States like smell, taste, and so forth.

How could this knowledge be beneficial? First, the Robot now can understand Objects in their relations: certain Objects would affect certain Objects by changing their certain State. Second, these certain Objects are detectable by reading their States so that the Robot can see the similarity between Objects. For example, when there is need for an Object that can be used for hitting a nail into wood. It can consider using a rock when there is no hammer because it can read/analyze the States of the Rock with the trained model for (used for hitting a nail, a nail installed, ± weight).

It also gives a hint that why we are curious animals. Our ancestors often have tried to use many things many different ways (and learned new ways!) and this quality has proven to be beneficial.

Difficulties of Training

We have conculded that the Robot can learn what kind of Objects would satisfy the relation of a certain pair of States. However, there are quite a number of cases ("n^2") for a possible pair of States and many of them are not even meaningful nor useful (e.g. (Hair_wet, Satiety)). Then what pair of States should we choose to train the Robot with and where to get large enough samples/examples of such Objects?

1. The simulation could be considered: Imagine the simulation like Matrix the movie where things are as real to the Robot as in reality. Of course, it would be difficult or impossible to build a such environment but the point is that the more realistic the environment is for a realistic reward which was to survive for our ancestors, the more meaningful/useful the relation of States and related Objects that the Robot learns would be. Note that most of our (human's) "Generalized knowledge of Objects by States" is directly/indirectly meaningful/useful for surviving. However more affordable/possible simulation would be something like NVIDIA Isaac Gym where the Robot will be trained for specific States and Objects for a specific reward (e.g. a reward for telling correctly what kind of Objects could be food).

2. Observing people and changes of States in the real world: people change States of Objects everyday because it is meaningful/beneficial for us living in our environment. Learning by observing how a large number of poeple (more than 8 billion possible samples) interact with certain Objects to change certain States and how such States affect another could help the Robot to learn human's "Generalized knowledge of Objects by States".

3. LLMs-generated samples

Advantage of SKG 4: Learning abstract things and Superstition (Not Finished)

How do we understand abstract concepts such as kindness, friendship, or love? We can get closer to the answer by asking another question: what benefits have humans gained by understanding abstract concepts?

One obvious benefit, directly or indirectly related to survival, is that understanding useful concepts allows us to predict future states and prepare for a better future.

Let's take some examples.

- Example 1) Predicting a less abstract concept, more like the laws of nature that our ancestors had to interact with every day: falling or staying in the air. Our ancestors could benefit from predicting the next spatial position of moving objects, for example, to hunt animals more effectively.

In this sense, let's assume that predicting whether Object_k will stay in the air or fall to the ground was of great interest to our ancestors. The input and ouput for the prediction could be:

+---------------------------------------------------------------------------------------------------------+

| Input (Current Objects/States) | Output (Future Objects/States) |

+---------------------------------------------------------------------------------------------------------+

| Object_1 (State1, State2, ...) | ("Object_k_in_the_air", type="state", state=1.0)//float in the air |

| Object_2 (State1, State2, ...) | OR |

| ... | ("Object_k_in_the_air", type="state", state=0.0)//fall to the ground |

| Object_k (State1, State2, ...) | |

| ... | |

+---------------------------------------------------------------------------------------------------------+

There were likely many Objects and States involved when they tried to predict the output. Obviously, focusing on random features such as the color of Objects would not help much with the prediction, and the learning process would be very slow.However, consider a reasoning process we have all experienced in our lives: we focus on the differences in Objects/States from our memories (experiences). For example, if it is difficult to make a fire while camping, we quickly try to compare what is different from the last time (is it because the wood is wet this time, whereas it was dry last time? Is it because the wind is too strong now, whereas it was weak before?).

This method could be applied as follows.

Memory for Object_k_in_the_air-------------------------------------------------------------------

What thing was Object_k?

list_what = [Thing_1, Thing_2, ...] = e.g. [ 0, 1, 0, 0, 1, 0 ... ] (Note that 1 means that Object_k falls under the category of such Thing) (Note that Thing is Category of Objects sharing certain States such as Food. Also note that Object can be included in multiple Things (e.g. Milk is Food and Liquid).)

What things were there?

list_where = [Thing_1, Thing_2, ...] = e.g. [ 1, 1, 0, 1, 1, 0 ... ] (Note that 1 means that such Thing was there with Object_k)

How were Object_k's States?

list_how = [State1, State2, ...] = e.g. [ 0.2, 0.1, 0.9, 0.5 ... ] (Note that State has a float value between 0 and 1. Also note that State could be from interaction with another Object/States (e.g. "Jar1_grabbed_by_robot").)

list_whatHapp = function_concatenate (list_what, list_where , list_how) = [ 0, 1, 0, 0, 1, 0 ... 1, 1, 0, 1, 1, 0 ... 0.2, 0.1, 0.9, 0.5 ... ]

result = e.g. 1 (Note that 1 means Object_k stayed in the air, 0 means it fell to the ground)

-----------------------------------------------------------------------------------------------------

As more data are collected with more experience and examples, we can calculate the average of list_whatHapp regarding the result.

list_avg_true = function_mean_value_of_each_element (list_whatHapp_true1, list_whatHapp_true2, list_whatHapp_true3, ...) = [ avg_true1, avg_true2, avg_true3, ...] (Note that "_true" denotes that the mean value is from lists where the result was 1 (i.e. Object_k stayed in the air))

list_avg_false = function_mean_value_of_each_element (list_whatHapp_false1, list_whatHapp_false2, list_whatHapp_false3, ...) = [ avg_false1, avg_false2, avg_false3, ...] (Note that "_false" denotes that the mean value is from lists where the result was 0 (i.e. Object_k fell to the ground))

Then we can measure intensity of difference which likely made a different result:

list_diff = function_subtract_each_element_and_square (avg_true, avg_false) = [ (avg_true1 - avg_false1)^2, (avg_true2 - avg_false2)^2, (avg_true3 - avg_false3)^2, ...] = [ diff1, diff2, diff3, ... ]

Then we can create a mask as follows:

next_high_diff = function_find_index_of_maximum_value (list_diff) = e.g. 4 (Note that it means "diff5" has highest value among other diffs, as index starts from 0)

list_mask = [ 0, 0, 0, 0, 1, 0, ... ] (Note that except for the index of "diff5", all other elements are 0) (Note that list_mask has the same length as list_whatHapp)

Next, we can apply the mask to the dataset of "list_whatHapp":

X = { function_multiply_each_element (list_whatHapp, list_mask) } = e.g. { [ 0, 0, 0, 0, 1, 0, ...], [ 0, 0, 0, 0, 0.8, 0, ...], ... } (Note that each fifth element is unmasked)

Finally, y is derived from each result of "Memory for Object_k_in_the_air".

Then, the input and ouput for training would be:

+------------------------------------+ | Input | Output | +------------------------------------+ | X | y: result (1 or 0) | +------------------------------------+If the prediction performance is poor by the model trained above, we can include the index of the next highest diff into "list_mask" and re-train the model:

list_mask = e.g. [ 0, 1, 0, 0, 1, 0, ... ] (Note that it means "diff5" > "diff2" > any other diff)

Next, we can apply the mask to the dataset of "list_whatHapp":

X = { function_multiply_each_element (list_whatHapp, list_mask) } = e.g. { [ 0, 0.3, 0, 0, 1, 0, ...], [ 0, 0.7, 0, 0, 0.8, 0, ...], ... } (Note that each second element is unmasked as well as fifth one)

Then, check the prediction performance.

If the prediction performance is poor by the model trained above, we can include the index of the next highest diff into "list_mask" and re-train the model:

(repeat above)...

We stop updating the mask when the trained model's prediction is sufficiently accurate and save both the mask and the prediction model into memory.

Now, Robot with enough experience with Objects/States that have been observed for prediction (whether stay in the air or fall) encounters Object_k and tries to predict whether Object_k will fall to the ground or not:

1. Retrieve the mask and the model from the memory

2. Apply the mask to Current Objects/States

3. Feed the masked Input into the model to get the prediction

It can be expected that if Object_k is a bird (the mask on "list_what", i.e., Object_k falls into the category of birds) or something with wings (the mask on "list_how", i.e., Object_k has a state ("Object_k_has_wings", type="state", state=1.0)), the model would predict that Object_k would stay in the air. This is because the observed different results from different features (birds/things with wings stayed in the air while most other things fell) have been incorporated into the mask, and the mask has emphasized such features (including being a Thing under the category of birds and having a high value on the State ("Object_k_has_wings")) for training the prediction model.

Note that such different features, which lead to different results, are what we humans often see as reasoning. For example, consider how natural the following sounds to us: "Why do birds or bats not fall to the ground? Because they have wings."

- Example 2) Predicting a more abstract concept: kindness. Our ancestors could benefit from predicting who to trust and depend on. For example, establishing a competitive organization where people treat and care about each other fairly by choosing members selectively.

In that sense, let's assume predicting (or more accurately, figuring out in this case) whether Object_k is a kind person or what a kind person would do in certain situations was of great interest to our ancestors.

(to be continued)

Let's revisit the question "How do we understand abstract concepts?". Let's assume we are asked to explain kindness. We might find it not easy and somewhat ambiguous to define exactly what kindness is, while its formal definition is "the quality of being friendly, generous, and considerate." However, we would find it quite easy to talk about what are the examples of kindness (e.g., giving food to hungry people, helping friends with cooking, etc.). This suggests that we understand kindness (an abstract concept) by experiencing examples (Objects/States) that can be predicted or considered as kind acts or not.

Note how common and universal the expression "for example, ..." is in almost every language. No matter what abstract concepts they are, they can be elaborated and made tangible to understand with the help of "for example, ...".

--invented mask & Objects/States--

How do we quickly understand video games and play them.

--the feeling of "well, that makes sense"--

--how to connect "concepts" with language/image--

Further discussions

- Advantage of SKG 5: Training in the simulation

- Advantage of SKG 6: categorized States based on a task

- Advantage of SKG 7:

- Advantage of SKG 8:

- Advantage of SKG 9:

- Advantage of SKG 10:

- Advantage of SKG 11:

- Advantage of SKG 12: detecting potential danger